Aims and Ambitions, seeking a Simulation Manifesto

Pour yourself a glass of something inebriating, and settle in for a little bit. I have a story to tell you: collectively, humanity is creating a parallel universe through computation. In order to better understand this phenomenon, I created a community on reddit. Together we are learning everything we can about how science and software are steamrolling towards the early phases of Nick Bostrom’s simulation argument.

I know that might sound far-fetched, but I would say that simulation and gaming as they exist today are akin to early biosynthesis on the primordial Earth. Computational reality as it currently exists will soon begin the next transition into early immersive holistic worlds. By that I mean truly open world experiences, not only undefined in terms of environment, but also with open-ended narratives and perpetual story. We will be able to credit this to developments in better artificial intelligence and a rise in atomized voxel environments.

More than just a revolution in entertainment, I believe that holistic parallel worlds will drive great long-term insight to the world’s youth. They will shape education for all ages, and teach tolerance by expanding global perspective. There also exists a huge potential to revolutionize labor through simulated optimizations: by mirroring the real world at full scale, resource planning becomes open-ended too. Then, a peer-to-peer virtual marketplace could arise that sells experiences instead of directly selling goods. People could collaborate on cloud-hosted alternate realities, borrow “game” assets under contract, and then deliver immersive content to each other directly through the browser.

I will touch on all of those subjects within the next month or two. Due to the expansive nature of our community and our project, I’ve split this “Statement” into three posts. In this one I will discuss each of the fun projects that the reddit community collectively found, and then explain how we’ve begun to incorporate those key reference concepts into our own web experiment. This is our MetaSim framework: a WebGL renderer with an API structure allowing many different reference servers to handle the generation of content and reference storage. Since we’re open source, we don’t want to run a project, we want to start a movement. In this article I attempt to capture the message of this movement, and hopefully win you to our cause! (We need all the help we can get!)

Please, check out our early phase pre-alpha below. It’s the start of the render-side of the content delivery platform!

Check out our WebGL project Mockup

Let’s roll back the clock and provide some context. Last September I wrote an article describing my proposal for the ideal ultimate strategy game. I knew that a consumer demand for such an experience was out there, but I was as yet unaware of any developers attempting to tackle the challenge. The idea is simple: have a scope-less sandbox game with self-defining narratives. The implementation is the challenge, and even more difficult is figuring out how to finance a project of this magnitude, which is why I feel that open source is the only way to progress. (There are a few open-source oriented economic models I’ve been considering for self-sustaining collaborative growth, but I leave that to a later post.)

So far, I have learned a tremendous amount about the technical challenges involved and the business objectives a project like this needs, but I still feel like this is only the beginning. Reaching out with my blog was an important first step, but I didn’t stop there. I realized the only way to make any progress on my concept would be to evangelize it through a social platform, to attract like-minded tinkerers and dreamers.

Thus, I started the /r/Simulate sub-reddit to bring an audience and start a conversation. It worked! There are so many different intelligent people out there with similar vision! I really have to say thank you to all of the people who have helped me shape that community into something special. It’s a unique blend of programmers, “professionals,” students, gamers, and scientists; I couldn’t have asked for a better crowd! I’ve made many new friends and connections through my endeavors there, so many talented people! Aaron, Rex, Max, Francisco, Peter, Spencer, Ron, and all the reddit mods, I can’t thank you enough for the hard work you’ve already put into this project!

Starting Discussions, Building a Community

When I started the community I didn’t know if anything would actually happen, and I certainly didn’t know if a project of our own would start because of it. The goal initially was to get people thinking about how a project like this might work hypothetically. That didn’t last long. With so much interest, the only thing to do was to make an effort at creating this ourselves.

Users began to submit suggestions about how an environment might be set up, like this example about procedural life. What was really unique was to see non-programmers with Masters and PhD level education flocking to our community to make suggestions. This is precisely what we wanted to see. People started making suggestions about:

- Procedural Nature/Geology driven terrain

- AI Driven Simulated Economics

- Generational Warfare modeling

- Artificial Evolution

- Top-Down Subject Classification

- Downsides to probabilistic AI

This led naturally to the first round of discussion about which programming language(s) to use. It turned into a competition of sorts between a few different camps. C#, C++, Java, and LISP were at the center of the argument. Benchmarking was being considered ahead of application, and the discussion became distracted by new hardware systems like Parallella. As if bickering about language was not adding enough “churn,” there was also dispute about which version control tool to use.

To better understand the argument, I started talking about this concept to one of my programmer friends and he suggested the book Algorithms, Languages, Automata, & Compilers: A Practical Approach by Maxim Mozgovoy. It’s a very rich text and takes a long time to understand; still, I was able to take away the basic principles of automata. I had not yet realized that regular expressions, and thus programming languages themselves, function by means of “automata.” It also showed me how parse trees work and explained context-free grammars. A ton of it went way over my head, but getting a look into how computer science fundamentally works seemed like a good place to start on a project like this.

A few weeks went by, and then a rational proposal came forward. Reddit user Maximus-thrax suggested a few key items on his wish-list as a coder. It may have taken some time to get there afterwards, but we listened. Here’s what he had to suggest:

- No huge monolithic chunk of code. It’s seriously daunting to even think about where to start on a huge code-base. The casual programmer will probably just give up at the start. One way to combat this is to dedicate large amounts of programmer time to writing excellent documentation. But keeping this up-to-date only puts serious strain on the programmers that are actually writing code – and we’d rather have them writing code, wouldn’t we?

- Use a common language, or the option to use many languages. If people don’t know the language, then again they have a high barrier to entry.

- Don’t use too many libraries, and make the build system super-easy. If I need to checklist 10 or more separate libs, and need to make sure they are the right version number and also wait for the right moment for the stars to align to compile your code, I probably won’t be joining your team.

- Make it multi-platform. And don’t just say it, do it. Your team doesn’t have a Mac user on the developers side? Probably your code will encounter some issues on the Mac.

- It must be open-source, although the actual license used can be debated elsewhere.

And his TL;DR: Break code into sub-tasks. Make sub-tasks totally independent. Coders can work on what they actually want to, and leverage the other parts. Make a set of tools, not the ultimate tool. Be language agnostic. Make it easy to contribute.

This started clicking a lot of gears for us, and eventually Aaron came up with the proposal for a common JSON format. A common data format though is only as good as the platform that can exchange the stack, which was the biggest concern. This worry would be carried through to later phases in our growth, but Aaron delivered an awesome solution! I’ll touch on that later in this post.

Early Research Phase

Apart from the discussion about how to architect a holistic product, we discussed the value add that could result from starting many small projects. Right away, we began to uncover droves of incredible information! University projects, professional 3D graphics groups, other semi-hobbyist groups like our own. There are tons of cool things to see, so I suggest you dig back in time on the sub-reddit and go through all of those interesting links!

One of the early sources that I found particularly mind opening was “Interactive Design of Urban Spaces using Geometrical and Behavioral Modeling – SIGGRAPH Asia 2009”. This was done by the group UrbanSim, and it appears to now be incorporated into a product called Synthicity.

This is what got me to start to consider “reference boundaries” or “reference levels.” That is, a city might render an output of tons of buildings, but those buildings are all either pre-existing, or just a set of parameter permutations. You might conceivably have one information layer produce terrain and then another produce cities, then another does individual buildings or streets at the personal level.

This caused me to try and visualize how all phenomenological events fit together. In a very abstract sense, each element of reality can be discretized into a pattern of leveled monads and unit states that can create on-demand system sets. All of this would be based around the observer and could scale up in size based on that individual’s awareness or influence. Such an architecture would include both external objective phenomena and internal subjective phenomena, and everything else in between like language, ideas, and cultural identity.

For this I hastily wrote a crummy article. I tried to release the idea on December 21st, 2012 to cash in on the hype and draw attention to the project. It didn’t work, which is probably for the best; I really didn’t want to attract the “New Age” crowd, even though I used some of their own symbolism to personify different aspects of my model. The idea that I was trying to sell was that there is nothing esoteric about reality; it’s simply a matter of layered unit operations creating complexity in each layer above.

Plus, if stochastically modeled, each complexity layer could be substantially compressed. Consider that you might simulate the actions of a whole country, but not of every person in that country. You instead use a probabilistic model to generate lower order agents as you “zoom in.” This would simply mean applying the concept of Level of Detail to a broader selection of “gameplay” or simulation. For example, maybe only the 100 most important people in relation to your character get rendered as fully autonomous agents and every other person is scripted at a very high level and treated as a group, not as individuals.
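To make the idea a bit more concrete, here is a minimal Python sketch of that kind of tiering. Everything in it is my own assumption for illustration: the `Person` class, the `importance` score, and the `split_by_detail` helper are hypothetical names, and a real system would need a far richer notion of "importance" than a single number.

```python
from dataclasses import dataclass


@dataclass
class Person:
    name: str
    importance: float  # hypothetical relevance score relative to the player


def split_by_detail(people, max_individuals=100):
    """Keep the most important people as individuals, summarize the rest."""
    ranked = sorted(people, key=lambda p: p.importance, reverse=True)
    individuals = ranked[:max_individuals]
    rest = ranked[max_individuals:]
    # Everyone else is treated as one group with aggregate statistics only.
    crowd = {
        "count": len(rest),
        "mean_importance": (
            sum(p.importance for p in rest) / len(rest) if rest else 0.0
        ),
    }
    return individuals, crowd


# Toy population: importance falls off with index.
population = [Person(f"citizen-{i}", 1.0 / (i + 1)) for i in range(1000)]
individuals, crowd = split_by_detail(population)
```

Only the `individuals` list would get full agent logic each tick; the `crowd` summary could drive a cheap statistical model, and members could be promoted to individuals as the player "zooms in."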

Such a tiered system could cut computational constraints when rendering massive worlds. So we decided the best approach to start might be a bunch of smaller projects that could work together towards a broader conceptual whole. The prototypes model worked for Spore, so why not give it a chance! The other side to this was that if we could find other open source projects, that would cut down our workload on the mini-project task at hand. We could just try to understand how these other projects work.

Agent-Based Applications & References

TravellerSim

One such endeavor that I had learned about years ago was “TravellerSim.” It’s a simple agent-based routine that models the spread of human cities, and then the creation of trade routes between those cities. Each city then forms into a trade block based on the interactions of travelling agents in between those cities. At a high level, you’re not treating cities and simple “state” models as flat, fixed entities. Instead, you have an emergent, automata-based phenomenon from which a higher order structural institution emerges.

http://electricarchaeology.ca/2009/10/16/travellersim-growing-settlement-structures-and-territories-with-agent-based-modeling-full-text/

Noble Ape

TravellerSim is not the only attempt to create a computational agent model of human interaction. Another important reference we uncovered was the “Noble Ape” simulation by Tom Barbalet. These simulations attempt to give agent “apes” semantic knowledge of their surroundings and he is currently working on an identity feature that will allow self reference and landmark based naming conventions from within an agent language map. Watch the video below and check out the source code here.

Rave AI Agents

Then we came across a project by Tomas “Myownclone” Vymazal, who created an agent-based simulation using his ActionScript3-based Rave AI system, built in the Irrlicht 3D engine. In his world, agents are farmers in tribes and live in towns. They collect food and resources, and then use pathfinding to find their way around the map. The AI behavior is stored as a finite state machine (FSM) which evolves via a genetic algorithm, such that the agents self-determine whether or not to fight for resources.
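I don’t know how Rave AI actually encodes its state machines, so take the following as a toy Python sketch of the general technique only. The states, observations, and especially the `fitness` function are placeholders I made up; in a real simulation fitness would come from how well an agent survives, not from a hard-coded rule.

```python
import random

STATES = ["gather", "fight", "flee"]                        # hypothetical states
OBSERVATIONS = ["food_nearby", "rival_nearby", "nothing"]   # hypothetical inputs


def random_genome(rng):
    """A genome is a full transition table: (state, observation) -> next state."""
    return {(s, o): rng.choice(STATES) for s in STATES for o in OBSERVATIONS}


def step(genome, state, observation):
    """Run the FSM for one tick."""
    return genome[(state, observation)]


def mutate(genome, rng, rate=0.1):
    """Copy the table and randomly rewrite a fraction of its transitions."""
    child = dict(genome)
    for key in child:
        if rng.random() < rate:
            child[key] = rng.choice(STATES)
    return child


def fitness(genome):
    """Placeholder score: reward switching to 'gather' whenever food is near."""
    return sum(1 for s in STATES if genome[(s, "food_nearby")] == "gather")


def evolve(generations=50, population_size=20, seed=0):
    rng = random.Random(seed)
    population = [random_genome(rng) for _ in range(population_size)]
    for _ in range(generations):
        # Keep the better half unchanged, refill with mutated copies.
        population.sort(key=fitness, reverse=True)
        survivors = population[: population_size // 2]
        children = [
            mutate(rng.choice(survivors), rng)
            for _ in range(population_size - len(survivors))
        ]
        population = survivors + children
    return max(population, key=fitness)


best = evolve()
next_state = step(best, "gather", "rival_nearby")
```

Because the best half of each generation is carried over untouched, the top score never goes down from one generation to the next. Whether an evolved agent decides to fight or flee when a rival is nearby falls out of whatever the fitness measure rewards.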

University of Birmingham Mesolithic Simulation

One of the few academic projects to actually place human agents in a visualized survival situation is the U. of Birmingham Mesolithic Agent Project. The University website itself says little about how the simulation works or what the direct goals of the project were. However, with just 10 minutes of googling it’s possible to understand the what and why a little better.

Vincent Gaffney’s Academia profile has several papers which describe the broader context of this research organization. This group is using agent-based modeling to determine settlement patterns in ancient Doggerland, the ice age landmass connecting England to continental Europe. Whilst I cannot find the precise paper targeting this project, I can speculate that ABM might be useful in finding submerged Mesolithic artifacts. This collaboration has many other interesting articles regarding ABM and antiquity; I recommend Medieval military logistics: a case for distributed agent-based simulation.

Mesolithic Agent Modeling Architecture – U. of Birmingham

Terrain Driven Applications & References

Creating terrain from Perlin noise is a very common project among procedural terrain generators, and random terrain generation is common practice in most scene editors. What is more difficult to find is procedural terrain generation that replicates plate tectonics, geophysics, erosion, biosystems and other real world factors.

In terms of professional grade software, there are plenty of options. Common ones include Terragen, Vue, and Bryce, but there are several more. Most of these are licensed only for use, not as a base to develop from, and the intent is generally passive rather than dynamic rendering. There are also dynamic game engines which allow for procedural content to be created, but the licensing practices and talent required for those engines mean that a large amount of capital is needed to get started.

There are plenty of professional projects generating terrain and universes in fully devoted environments, which I’ll mention later in the External Projects section. Primarily, we were instead drawn towards the projects which were terrain specific, where we could then add agent content on top.

CSIRO Tellus Code

Early on, one of our members, a veteran redditor, told us about an Australian project to model terrain deformation resulting from water-based erosion and other factors. This guy claimed to be a geology professor and former defense contractor, so I was excited to hear his recommendations. The software he recommended is called Tellus. Rather than explain it myself, I’ll use their introduction:

Tellus is a new 3D-parallel code based on particle-in-cell technique capable of simulating geomorphic and stratigraphic evolution. Tellus solves the shallow water equation to simulate various types of flow (open-channel, hyperpycnal, hypopycnal, debris flow) materialized by fluid particles on an unstructured grid. External forces such as sea-level fluctuation, vertical displacement, rainfall and river inflows can be imposed. A sediment transport criterion allows siliciclastic material to be eroded, transported and deposited. The code is capable of modeling compaction based on sediment loading.

The software is not publicly available yet, but that’s fine. Currently the project still requires some critical infrastructure development before we can start incorporating the output of software like Tellus, but we’ll get there. Landscape deformation will be absolutely critical in distinguishing a level of realism apart from standard noise models. Really, we need more geologically intensive reference engines to be built into the background of our simulations. For now, check out this showcase on what Tellus can do:

Atomontage

As a fairly new company, Atomontage is still in development. What they offer as a product is substantially different from other game engines. They have a voxel-based, physics-driven terrain engine. This allows for terrain to be molded in real-time as the scene gets produced volumetrically. Not much is disclosed about how voxel data is stored, loaded, or retrieved based on proximity. Still, the results are a true work of art!

Miguel Cepero’s Procworld

Arguably, Miguel Cepero’s work is the most inspirational, educational and motivating project we have yet come across. I honestly believe his procedural worlds are more diverse and combinatoric than any of the commercial products on the market. Granted, he has started to port to Unity for better renders, but his underlying engine is something to slobber over. He maintains a routine blog in which his latest features are constantly being showcased. What he has created is nothing less than a real-time smooth-voxel procedural planet with diverse vegetation and a whole host of compression methods.

I cannot stress enough how brilliant his work is: he has created on-demand content which is stored in a way which is not memory intensive. (I wish he’d elaborate more on how.) His content loads in parallel from an octree cell, and only writes to the cell if a change is made. According to the Q&A Miguel generously provided in our reddit comment thread, he uses 64-bit cell key identifiers, allowing him to create flat worlds up to 90 billion square miles! The whole thing is written in C++ as close to plain C as possible, with one domain specific language for architecture grammar. Level of detail is applied with tiered 3D clipmap rings that are more concentrated around the location of the observer.
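Miguel has not published his key layout as far as I know, so the following Python sketch is purely my own guess at how a 64-bit cell identifier could work. The bit split (4 bits of level, 20 bits per axis) and the function names are assumptions for illustration, not his scheme.

```python
LEVEL_BITS = 4       # assumed: up to 16 levels of detail
BITS_PER_AXIS = 20   # assumed: 2**20 cells along each axis
AXIS_MASK = (1 << BITS_PER_AXIS) - 1
LEVEL_MASK = (1 << LEVEL_BITS) - 1


def pack_cell_key(level, x, y, z):
    """Pack a level and three cell coordinates into one 64-bit integer."""
    if not 0 <= level <= LEVEL_MASK:
        raise ValueError("level out of range")
    for value in (x, y, z):
        if not 0 <= value <= AXIS_MASK:
            raise ValueError("coordinate out of range")
    return (
        (level << (3 * BITS_PER_AXIS))
        | (x << (2 * BITS_PER_AXIS))
        | (y << BITS_PER_AXIS)
        | z
    )


def unpack_cell_key(key):
    """Recover (level, x, y, z) from a packed key."""
    level = (key >> (3 * BITS_PER_AXIS)) & LEVEL_MASK
    x = (key >> (2 * BITS_PER_AXIS)) & AXIS_MASK
    y = (key >> BITS_PER_AXIS) & AXIS_MASK
    z = key & AXIS_MASK
    return level, x, y, z


# Sparse store: a cell only takes up space once somebody has changed it.
# Untouched cells would be regenerated procedurally on demand.
modified_cells = {}
key = pack_cell_key(level=3, x=1024, y=7, z=99999)
modified_cells[key] = b"player dug a hole here"
```

The appeal of a scheme like this is that the key doubles as an address: the generator can rebuild any untouched cell from its key alone, and the store only has to hold the cells a player has actually edited.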

I could go on explaining how groundbreaking and kick-ass Miguel’s work is, but instead, you should just watch his videos for yourself and read his blog, you won’t be disappointed. His archives are extensive and cover a wide variety of topics. More than any other project, Miguel creates procedural content; it’s not just terrain, it’s trees, boulders, buildings, weather.

Red Blob Games

One of the better 2D map generators I’ve seen is this project done by a former CS student at Stanford. It is very similar to the EUIII Map Generator by “Nom” in that it uses Voronoi diagrams to create polygons and then performs clustering and terrain overlays. He breaks these shapes into nodes and edges, and then uses Delaunay triangulation to determine paths between nodes or edge points.

From there, he uses either noise or a sine function to determine the coast lines. He “cheats” by defining the shape first and then creating an elevation map within that shape. Then he uses edge-nodes and height map data to create the direction of water flow. Finally he calculates rivers, watersheds and biomes from that data.

Although visually impressive, this algorithmic approach is working procedurally backwards, and as such it is limited in application. The maps produced could be very accurate depictions of volcanic islands, but might not scale up well to an entire continent. Both a demo and the source code are available.
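The water-flow step is easy to sketch once elevation exists. The Python below is my own simplified take, not the project’s code: it works on any graph of nodes with an elevation each, points every node at its lowest neighbor, and then pushes rainfall downhill to find where rivers would form. The function names and the tiny example map are made up.

```python
def downslope(elevation, neighbors):
    """For each node, pick the lowest adjacent node, or None at a local minimum."""
    target = {}
    for node, adjacent in neighbors.items():
        lowest = min(adjacent, key=lambda n: elevation[n])
        target[node] = lowest if elevation[lowest] < elevation[node] else None
    return target


def accumulate_flow(elevation, neighbors, rainfall=1.0):
    """Route one unit of rain per node downhill and total up the water."""
    target = downslope(elevation, neighbors)
    flow = {node: rainfall for node in elevation}
    # Visit nodes from highest to lowest so upstream water arrives first.
    for node in sorted(elevation, key=elevation.get, reverse=True):
        if target[node] is not None:
            flow[target[node]] += flow[node]
    return flow


# Tiny hypothetical island: a ridge (a), two slopes (b, e), a valley (c), the coast (d).
elevation = {"a": 3, "b": 2, "c": 1, "d": 0, "e": 2}
neighbors = {
    "a": ["b"],
    "b": ["a", "c"],
    "c": ["b", "d", "e"],
    "d": ["c"],
    "e": ["c"],
}
flow = accumulate_flow(elevation, neighbors)
```

Nodes whose accumulated flow passes some threshold would be drawn as rivers, and every node that drains to the same coastal outlet belongs to the same watershed.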

World Box

Similar to the “Red Blob” project, this tiny simulator packs a lot of punch. World Box is a browser-based simulation where simple terrain is generated, and the user’s clicks place a new settlement onto the island. Then, a path-finding algorithm connects each of these “cities” to its nearest neighbors. Then, if the player is feeling destructive, he can wipe out sections of agents all at once. There’s no description of how this was made, but it’s a good example of a small experiment to try and emulate. Also, I spent hours toying with this!

PlaTec

Lauri Viitanen of the Helsinki Metropolia University of Applied Sciences wrote his thesis paper on the subject of creating procedurally generated terrain from plate tectonic motion. After defining plate boundaries, the system is allowed to evolve and drift.

Plates are assigned an amount of “crust.” When two plates collide, the plate with less crust will subduct. If the plate mass is close to equal, a “continental collision” occurs and the crust of the smaller plate transfers to the larger one. This in turn decelerates the collision event. Simultaneously, voids left during plate separation mimic ocean ridges as new crust gets added.

While there does not appear to be any further work by Lauri in this particular field, his project was open source and free to use. Below is a video I captured of his code in action; despite the weird X, Y wrapping, it is really fascinating to see the results. If applied to a more convincing render environment, I am certain it would blow away anything that can be done by Perlin noise alone.
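As a rough illustration of that collision rule, here is a small Python sketch. It is not PlaTec’s code: the `Plate` class, the `similarity` threshold for "close to equal," and the `transfer_fraction` are all numbers and names I picked arbitrarily to show the branching logic.

```python
from dataclasses import dataclass


@dataclass
class Plate:
    name: str
    crust: float  # total crust mass carried by the plate


def collide(a, b, similarity=0.1, transfer_fraction=0.25):
    """Resolve one collision step between two plates and describe what happened."""
    smaller, larger = sorted((a, b), key=lambda p: p.crust)
    if larger.crust == 0:
        return "nothing to collide"
    gap = (larger.crust - smaller.crust) / larger.crust
    if gap <= similarity:
        # Near-equal masses: crust moves from the smaller plate to the larger.
        moved = smaller.crust * transfer_fraction
        smaller.crust -= moved
        larger.crust += moved
        return "continental collision"
    # Clearly unequal masses: the lighter plate dives under the heavier one.
    return f"{smaller.name} subducts"


eurasia, india = Plate("Eurasia", 100.0), Plate("India", 95.0)
pacific, nazca = Plate("Pacific", 100.0), Plate("Nazca", 10.0)
first = collide(eurasia, india)
second = collide(pacific, nazca)
```

In a full simulation this would run every step while the plates overlap, with the crust transfer feeding back into the plates’ momentum so that the collision slows down over time.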

Laying the Groundwork

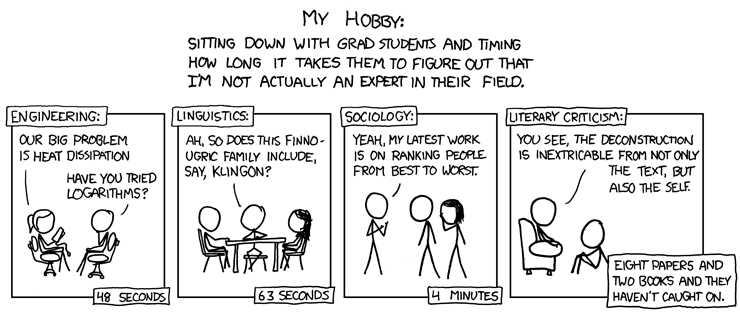

The research was beginning to seem exhaustive and fruitless. It is extraordinarily dissatisfying to just talk about the subject rather than participate in it. Alone I could not have progressed much further, but by creating the community I was able to attract the skills needed to get things started. Countless experts across many disciplines had a lot to suggest, but we needed to figure out just where to get started. I was beginning to feel a lot like the guy in this XKCD comic:

Aaron’s Processing Worldbuilder

I don’t think we would have made it much further if it were not for Aaron’s brilliant contributions appearing when they did. He surprised us with his own “Processing WorldBuilder,” which already fit the model for small “experiment” projects as a means to get started. This environment created continents/islands and then a placement algorithm started to determine human settlement locations. Being written in Processing this was quick to compile without complication. The code is not complex, but the results are stunning in comparison to other similar projects. Watching settlements spontaneously build themselves is a very cool thing to witness.

Then Aaron took things a step further and incorporated the methodology of PlaTec into the terrain generation mod he had already made. I had some trouble getting this to compile, but eventually this will be part of the methodology we use to create textures for Earth-like worlds. Again, I cannot stress enough how important Aaron’s contribution was. His posts and his work laid the foundations for future developments that would rock our world.

Compiling Other Projects

The first thing we did was try to compile some of the existing projects which had been shared so far. (Like HexPlanet, and the EU3 random map generator as discussed in my September post.) Of course, the source for most of those projects was either too obscure or too old to run without a massive amount of effort. This went on for about a week before we decided to just stop and start building for ourselves.

We had started by trying to set the scope of the “meta-project”, and of course then transitioned into the smaller projects framework. As we uncovered the aforementioned external micro-projects, a few members of our community started to share their own works and began to incorporate those into their own projects.

The awesome power of three.js

At about this time, we had been deeply questioning the path we had been taking, which was to try and compile code that did not work. If we had been able to get parts of this code to work, it was suggested that we try and use the Emscripten compiler to push local rendering techniques to the browser.

That was a major decision that surfaced, to push our rendering into a browser environment. Why? Well, why not? A browser-based simulation has the ultimate portability and can be easily accessed without comprehensive software installation. It can offer a way to reach a broader audience than the hardcore PC gamer, and it could potentially capture market share of the enormous casual gamer industry. Plus, certain web applications could later be ported to mobile with very little modification to the codebase. Totally worth a shot!

So our project was at a crossroads of sorts. On one hand we had all of this code base to try and decipher; on the other hand we had swarms of people ready to contribute to the cumulative idea of the project. The decision to try web rendering was worth it. Originally, we had been inspired by a few browser experiment projects that appeared.

Chief among those was the story told by RO.ME, which absolutely blew us away. Here was this narrative using multiple media formats to tell this beautiful story about an epic dream. Without having much buildup, I was unaware of how powerful web based rendering had become; also the whole plugin free aspect was the best part. I knew that Unity had a web enabled version, but RO.ME used a library written by “Mr. Doob” (Ricardo Cabello), called three.js. It opened our world up to the idea of having a full system and environment running in-browser! Just see for yourself!

What’s not to like about web-based rendering? It’s hardware accelerated, no plugins, easy delivery. Sure, there isn’t a game engine fully developed and ready to fly directly in browser… I see that as an opportunity instead of a challenge. Imagine never downloading a game client again; you just sign onto a website and play, in full immersive 3D, no problems. The work of Mr. Doob and his collaborators pushes the boundaries of WebGL, and it already has a massive payoff. I can only see good things to come from here!

Three.js has a ton of great demos available. Take this one showing 100,000 stars, for example, or this post-processing viewed Earth model. Shaders, textures, polygon objects, animations, transformations: WebGL has it all. Apart from the demos featured on the three.js demo page, there were a few paramount projects that stood out in terms of terrain rendering.

Rob Chadwick’s Terrain Editor

One of the early WebGL experiments, this WebGL Terrain Editor renders a height field that can be modified from user interactions. WebGL here uses your GPU to do raytracing for atmospheric conditions, and uses algorithms to map textures to terrain normals. You can see the original Chrome experiment page here. It was an eye-opener: it showed us that desktop applications wouldn’t be necessary to output detailed terrain environments. Now play with it for yourself!

Project Windstorm

You can play directly with the project here. I am not porting this to an iframe because you really need the screenspace to take advantage of the beautiful UI, which shows all of the render options, calculations, and a perspective frustum viewer.

Written by Stavros, a web developer from Greece, this project is explained in detail on his site Zephyros Anemos, from his Level of Detail (LOD) tileset render method to the fetching algorithm that makes data requests based on the current perspective. He uses a JPEG for the lightmap and a PNG to hold the heightmap. From this he decompresses the data, generates a quadtree, and generates a vertex map for the terrain.

WebGL GPU Landscaping and Erosion

Similar to Rob Chadwick’s editor, this project allows for responsive editing of WebGL terrain. This project was created by Swiss developer Florian Boesch of Codeflow.org. The difference is that Florian’s models have a procedural terrain editor built in, and it handles displacement mapping differently. He uses an isometric height field to improve shaders and curvature.

The WebGL terrain project is ported from his earlier OpenGL “Lithosphere” project, except that he added water and hydraulic erosion! It’s a really incredible application of geomorphic principles to terrain driven content. His site documents his work very well, but you can see the live demo here and the source code here. (The embedded iframe of the live demo was problematic, so here’s some video of it in action):

Cesium WebGL Virtual Globe

Cesium has the most comprehensive WebGL map of the Earth. Created by Analytical Graphics, Inc. (AGI), it’s the result of a defense consultant making a project for fun as a way to experiment in web-based graphics. What is great about their model is that it allows for GIS data to be animated live through their API. Vectors can be drawn and scripts can be written that allow for great demos and live script editing from their “Sandcastle” feature.

They already have built into their source all of the astrometry, orbital physics, map projections, a level of detail quadtree, vertex height maps, and multiple projection map-views; they have a ton of great features! It’s almost tempting to fork from their project instead of using our own, except that we have a list of other needs which it cannot address: procedural generation of details at the FPS level, and a storage paradigm geared to that. We might mimic some of their libraries, but really, to get to the detail we need, our own solution is needed. Here is their main demo; also, check out these pics of their best features:

VoxelWright

VoxelWright is a Minecraft clone built completely in three.js! The features are pretty straightforward: voxel cubes which can be stacked. You can also write scripts from the console to generate objects. It was also one of the first scenes I’ve seen that takes command of your mouse and offers to run at full screen. This simple set of features is what makes the experience feel more like a game or a simulator experience. Also, this got me to start thinking about the best libraries to use for an interface. Recently I’ve seen a proliferation of Bootstrap.js, which is a snappy responsive web framework. I’ll touch more on this in a later post. So check out the application itself, find them on their own subreddit, and here’s a quick video showing how to do scripting.

Our “MetaSim” Engine

After digging through so many different projects and creating a group of impassioned collaborators, Aaron put forward the first true codebase to form the basis of our simulation. This is the WebHexPlanet app which I showcased at the top of the post. After putting up the three.js scene, Aaron created an icosahedral sphere planet, a moon, a skybox and a lens flare sun. Thus, we were up and running! I textured the world with the “Eternal War” planet texture I made, and we had a good start!

We spent the ensuing months tweaking and adding content in small bursts. Aaron found a way to add a scattering layer, and suddenly we had our very own pale blue dot! (Our last stable release.) This was done using Rackup for the web server, and we’ve been hosting on Heroku.

Rex, Max and I started to abstract parts of the renderer and Aaron started working really hard on the API. Rex started working on a side project to do solar system formation estimates based on his particle collision modelling. Eventually though, we’ll have a server application that can model the proto-planetary disk of an early solar system so as to accurately create a set of realistic planetary systems. The API will pass this data to a reference engine, which in turn will feed the renderer.

We had a Google hangout in March, where Aaron explained the first version of the API (below). Recently, he has documented his work in a blog format, and we’re both available regularly through the Reddit community.

Closing Remarks

This concludes part I of my update on the project, which has essentially been summarizing the updates of the last six months. However, there are several important subjects I’d like to address as separate posts. Mainly, we have not been the only project with similar ambitions. In this post I covered small scale code base projects that we have used to shape our current project; what I have not described is all the competing large projects we have interacted with. That is what I will discuss in Part II.

Part III will be on the design process, and the ongoing discussions revolving around what features people will want to see and play. This covers a lot of subject matter, which we have tried to push into the forums, but it currently seems difficult to get people engaged there. I had a good many lunch discussions with Peter, and Ron nearly read my mind in terms of his technology design. There is a use case document which still needs some work, and it will be the first public draft ready for review and amendments.

I hope this material has really got you thinking! If this spins off new projects, fantastic! If you want to contribute to our goal, you need only join the GitHub and introduce yourself on our MetaSim subreddit. (/r/Simulate is more general purpose now.)

Think about what type of world you want to make, tell us, let’s figure out how to make it for you! We will produce procedural worlds and fill them with agents who live out procedural narratives, it will be a blast and I look forward to our journeys together. Cheers!

http://www.iontom.com/2013/05/05/sim-update-part1/
